Is My RAG System Over-Engineered? I Tested It.

If you’re building with Large Language Models, you’ve probably heard of Retrieval-Augmented Generation, or RAG. It’s the go-to technique for making LLMs smarter by connecting them to your own data, helping them give factual, domain-specific answers. The common wisdom in the developer community is that to get the best results, you need to pull out all the stops: complex data chunking methods, sophisticated retrieval techniques, and multiple layers of reranking.

But what if that’s not just overkill, but actually counterproductive?

I got curious about the trade-offs between theoretical complexity and real-world performance. Does a “state-of-the-art” retrieval pipeline actually deliver a better user experience, or does it just slow things down? To find out, I built a RAG system on top of Groq’s fast LLM inference API and put four retrieval strategies to the test, from the simplest to the most complex. The results might surprise you.

My Experiment

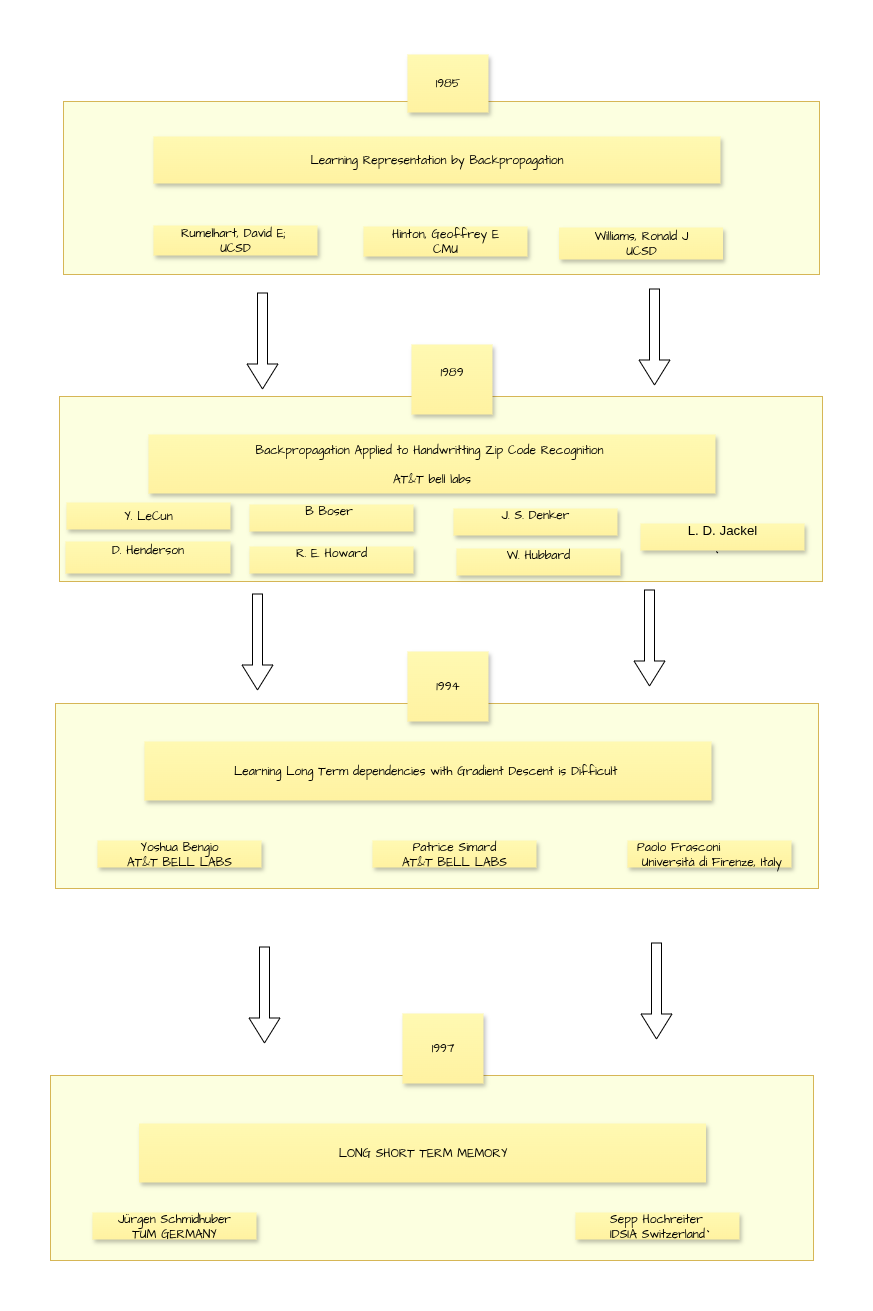

I set up a straightforward RAG pipeline to answer questions about machine learning. For the context, I fed it Wikipedia articles and academic papers, and then I asked it questions like, “What is machine learning?” and “How is a ResNet better than a CNN?”

Here were the four contestants in my retrieval showdown:

- Just the Keywords (BM25): The old-school, reliable keyword search. It’s incredibly fast and great at matching exact terms.

- Just the Meaning (Semantic Search): This method uses vector embeddings to find documents that are conceptually similar to the query, even if they don’t use the same words.

- The Best of Both Worlds (Hybrid Search): I combined keyword and semantic search using a clever technique called Reciprocal Rank Fusion (RRF). I hoped this would give me the precision of keyword search with the contextual power of semantic search.

- The Perfectionist (Hybrid Search + Reranker): On top of my hybrid search, I added a cross-encoder reranker. A reranker takes the top results from the initial search and does a much deeper, more computationally expensive analysis to figure out the absolute best matches.
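To make the two baseline contestants concrete, here is a minimal pure-Python sketch of BM25 keyword scoring and of cosine-similarity ranking, the core operation behind semantic search. It is an illustration, not the pipeline’s actual code. The whitespace tokenizer, the toy documents, and the defaults k1=1.5 and b=0.75 (common choices) are assumptions. In a real system, the vectors passed to `cosine` would come from an embedding model.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Naive lowercase whitespace tokenizer (an assumption; real systems do more)
    return text.lower().split()


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score every document against the query with Okapi BM25."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n
    # Document frequency: how many documents contain each term
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            # IDF with +1 inside the log so it never goes negative
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1)
            freq = tf[term]
            # Term-frequency saturation (k1) and document-length normalization (b)
            score += idf * freq * (k1 + 1) / (freq + k1 * (1 - b + b * len(toks) / avgdl))
        scores.append(score)
    return scores


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def rank(scores: list[float]) -> list[int]:
    # Document indices ordered from best to worst score
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


docs = [
    "machine learning is a field of artificial intelligence",
    "resnet uses residual connections in deep networks",
    "the weather today is sunny",
]
print(rank(bm25_scores("what is machine learning", docs)))  # → [0, 2, 1]
```

The toy run also shows BM25’s blind spot. The weather sentence outranks the ResNet sentence purely because it shares the word “is” with the query. Semantic search, which compares embedding vectors with `cosine`, is meant to catch relatedness that plain word overlap misses.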

My goal was to measure two things: speed (latency) and the typical quality of the answers generated from the retrieved context.
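The article doesn’t say exactly how latency was timed. One reasonable way is to wrap each retriever in a small harness that runs every test query a few times and summarizes wall-clock time. The sketch below assumes each strategy is exposed as a function taking a query string; `time_retriever` and `fake_retrieve` are hypothetical names used only for illustration.

```python
import statistics
import time
from typing import Callable


def time_retriever(retrieve: Callable[[str], object], queries: list[str], repeats: int = 3) -> dict[str, float]:
    """Run every query `repeats` times and summarize latency in seconds."""
    samples = []
    for _ in range(repeats):
        for q in queries:
            start = time.perf_counter()  # monotonic, high-resolution clock
            retrieve(q)
            samples.append(time.perf_counter() - start)
    return {
        "mean_s": statistics.mean(samples),
        "median_s": statistics.median(samples),
        "max_s": max(samples),
    }


def fake_retrieve(query: str) -> list[str]:
    # Hypothetical stand-in for a real retriever: pretends to take ~50 ms
    time.sleep(0.05)
    return [f"doc for {query}"]


print(time_retriever(fake_retrieve, ["What is machine learning?", "How is a ResNet better than a CNN?"]))
```

Reporting the median and the max alongside the mean matters for interactive apps, because a few slow outliers are what users actually notice.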

The Results Are In: Speed Trumps Complexity

My experiment painted a clear picture—and it shattered a few common myths. The difference between the four strategies was far from marginal:

| Strategy | Avg. Retrieval Time | Typical Answer Quality | Insight |
|---|---|---|---|
| BM25 Only | ⚡ ~0.5–1s | Often partial/incomplete | Fastest, but too shallow for complex concepts. |
| Semantic Only | ⚡ ~1–2s | Consistent, context-rich | Strong for finding similar meaning. |
| Hybrid (Semantic + BM25) | ⚙️ ~2–3s | Best balance of speed + coverage | Fused results were more complete and multi-source. |
| Hybrid + Reranking | 🐢 ~9–13s | Most accurate, longest responses | Latency is unacceptable for real-time use. |

Myth #1: Hybrid Search is a Watered-Down Compromise

The Verdict: Busted. Far from being a compromise, I found hybrid search was the clear winner for practical, production-ready applications.

By combining vector meaning with keyword precision via Reciprocal Rank Fusion, the Hybrid retrieval returned the most complete, multi-source answers—and did so in a swift 2–3 seconds. It offered the best balance of retrieval depth, diversity, and speed. For most use cases, this is the sweet spot: reliable, diverse context without the long wait.
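Reciprocal Rank Fusion is simple enough to write in a few lines. Each document’s fused score is the sum of 1/(k + rank) over every ranked list it appears in, so documents that rank well in both the BM25 list and the semantic list rise to the top. The sketch below assumes each retriever returns an ordered list of document IDs. k=60 is the constant from the original RRF paper by Cormack et al. and a common default; it is not necessarily what this experiment used.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document IDs with Reciprocal Rank Fusion.

    Each document scores sum(1 / (k + rank)) over every list it appears in,
    where rank is 1-based. A larger k flattens the gap between top and lower ranks.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


bm25_ranking = ["d1", "d2", "d3"]      # e.g. top hits from keyword search
semantic_ranking = ["d3", "d1", "d4"]  # e.g. top hits from vector search
fused = reciprocal_rank_fusion([bm25_ranking, semantic_ranking])
print([doc_id for doc_id, _ in fused])  # → ['d1', 'd3', 'd2', 'd4']
```

RRF only looks at ranks, never at raw scores. That is why it can merge BM25 scores and cosine similarities, which live on completely different scales, without any normalization step.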

Myth #2: Reranking is Always Worth It for the Best Quality

The Verdict: Busted. While the reranker strategy delivered the “most accurate” and longest responses, the computational cost was dramatic. The retrieval latency jumped from a respectable 2–3 seconds to a staggering 9–13 seconds.

My data shows that while a cross-encoder can indeed boost precision, its overhead caused an approximately 461x slowdown compared with the simple keyword approach. For any interactive application, latency this high is a deal-breaker. A reranker might be a valuable tool for offline tasks or evaluation, but in my real-time setup it was a performance killer.
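The reranker’s cost is easy to see in code. A cross-encoder scores each (query, candidate) pair with a full model forward pass, so its work grows linearly with the number of first-stage candidates, on top of the hybrid retrieval that produced them. The sketch below shows that two-stage pattern. `toy_pair_score` is a hypothetical word-overlap stand-in so the example runs without a model; in practice you would plug in a real cross-encoder (for example, a sentence-transformers `CrossEncoder`).

```python
from typing import Callable, Sequence


def rerank(query: str, candidates: Sequence[str],
           score_pair: Callable[[str, str], float], top_n: int = 3) -> list[str]:
    """Re-order first-stage candidates using a (query, document) pair scorer.

    The scorer is called once per candidate, so cost grows linearly with
    len(candidates); with a real cross-encoder each call is a model forward pass.
    """
    scored = [(doc, score_pair(query, doc)) for doc in candidates]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [doc for doc, _ in scored[:top_n]]


def toy_pair_score(query: str, doc: str) -> float:
    # Hypothetical stand-in for a cross-encoder: fraction of query words found in the doc
    q = set(query.lower().split())
    d = set(doc.lower().split())
    return len(q & d) / len(q) if q else 0.0


candidates = [  # pretend these came out of hybrid retrieval
    "resnet adds residual connections",
    "cnn stands for convolutional neural network",
    "skip connections in deep networks",
]
print(rerank("residual connections in resnet", candidates, toy_pair_score, top_n=2))
# → ['resnet adds residual connections', 'skip connections in deep networks']
```

Shrinking the candidate list passed to `rerank` is the main lever for cutting this cost, at the price of giving the reranker fewer chances to rescue a good document.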

Myth #3: More Complex Data Chunking is Always Better

The Verdict: Busted. The internet is full of articles advocating for “agentic chunking” or other advanced, multi-step strategies.

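For my experiment, I stuck with a simple RecursiveCharacterTextSplitter, which recursively splits text on natural boundaries (paragraphs, then lines, then words, and finally individual characters) until each chunk fits a size limit. It’s sometimes loosely called “simple semantic chunking,” although it doesn’t use embeddings at all. This fast, predictable approach provided high-quality, relevant context for the LLM without the overhead or cost of more complex methods. It reinforced for me that sometimes the simplest, most scalable solution is the most effective one in a production environment.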
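The idea behind recursive character splitting fits in one short function. This is a simplified pure-Python sketch, not LangChain’s implementation. It mirrors the splitter’s default separator order (`"\n\n"`, `"\n"`, `" "`, `""`) but leaves out features such as chunk overlap. `recursive_split` is a hypothetical name, and `chunk_size=200` is an arbitrary illustrative value, not the setting used in the experiment.

```python
def recursive_split(text: str, chunk_size: int = 200,
                    separators: tuple[str, ...] = ("\n\n", "\n", " ", "")) -> list[str]:
    """Split text into chunks of at most chunk_size characters, preferring coarse boundaries."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    sep, *rest = separators
    if sep == "":
        # Last resort: hard cut every chunk_size characters
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    chunks: list[str] = []
    current = ""
    for piece in text.split(sep):
        # Greedily grow the current chunk while it still fits
        candidate = f"{current}{sep}{piece}" if current else piece
        if len(candidate) <= chunk_size:
            current = candidate
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # Piece is too big on its own: retry it with the next, finer separator
            chunks.extend(recursive_split(piece, chunk_size, tuple(rest)))
            current = ""
    if current:
        chunks.append(current)
    return chunks


sample = "para one is short.\n\npara two is also short.\n\n" + "x" * 50
for chunk in recursive_split(sample, chunk_size=30):
    print(repr(chunk))
```

Because it only looks at characters and separators, this kind of splitter is cheap and deterministic, which is exactly the “fast, predictable” property the experiment relied on.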

My Key Takeaways for RAG Builders

My experiment showed that the pursuit of perfect relevance must be balanced against the realities of latency and scalability.

- Embrace Hybrid Search: It’s not a compromise; in my tests it was the most robust and efficient way to retrieve information. Combining keyword precision with semantic context gives you a richer, faster, and more reliable foundation for your LLM to work with.

- Use Rerankers with Extreme Caution: While they can enhance relevance, the massive latency they introduce makes them unsuitable for most real-time applications. Save them for offline processes where speed isn’t a critical factor.

- Simplicity Outperforms Complexity: Don’t get caught up in the hype of overly complex chunking and retrieval strategies. A straightforward approach using simple recursive chunking and an efficient Hybrid setup is often more than enough to build a fast, scalable, and effective RAG system.

The “perfect” RAG pipeline isn’t the one with the biggest model or the fanciest reranker; it’s the one that balances performance with practicality.

P.S. Everything in this experiment was run on my local system setup.