How AI Turns Noise into Art

Published:

Welcome to the wonderfully wacky world of AI image generation, where noise isn’t just unwanted static—it’s the secret sauce that transforms raw data into breathtaking visuals.

If you’ve ever wondered how an algorithm goes from a garbled mess of pixels to a photorealistic masterpiece, buckle up: we’re about to diffuse some serious knowledge!

From Chaos to Clarity: The Diffusion Process

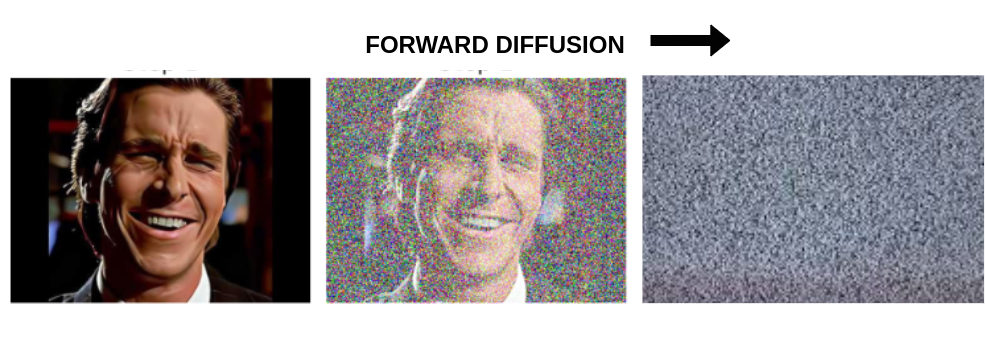

Imagine starting with a pristine image, then gradually blurring the lines—literally—until all you have left is noise. That’s the forward diffusion process in a nutshell. Here’s how it works:

Input Image: The journey begins with your high-resolution, crystal-clear image.

Iterative Noise Addition: Noise is added in small, controlled increments. Think of it as seasoning your data; a little bit at a time, following a precise recipe.

Pattern Recognition: As noise accumulates, the model starts to recognize patterns, much like how you begin to see shapes in the clouds.

Variance Scheduling: This step is all about balance—using parameters like βmin, βmax, and a linspace function to determine how much noise is added at each step. It’s like the Goldilocks principle for AI: not too little, not too much, just right.

Once the image is thoroughly “noised-up,” the magic of reverse diffusion begins.

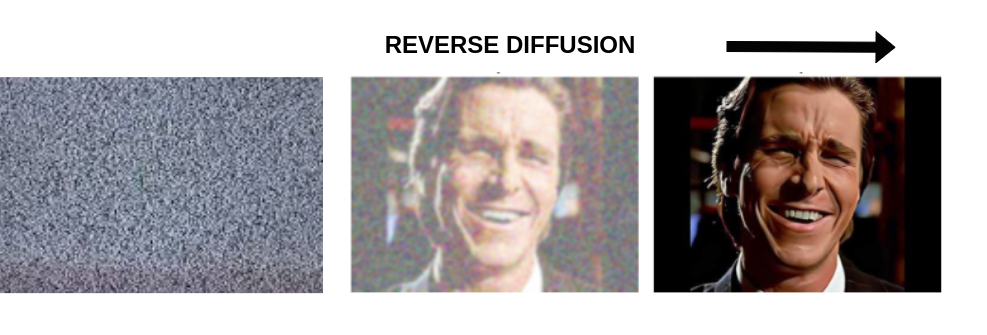

Reversing the Mayhem: Reverse Diffusion Now that we’ve thrown our image into a chaotic blender, it’s time to reconstruct the masterpiece from the mess. The reverse diffusion process is where the model flexes its muscle: Reconstruction: Starting from the noisy version, the model gradually peels away the noise. It’s akin to watching a sculpture emerge from a block of marble. Stochastic Generation: The image is regenerated through a series of denoising steps, ultimately yielding a clear and coherent picture. In short, the AI is the ultimate fixer-upper, turning pixelated pandemonium into art.

``` Under the Hood: The Neural Network Blueprint

- What powers this diffusive wizardry?

A well-designed neural network architecture that would make any computer scientist swoon: Residual Blocks

What’s Cooking?

Each residual block is like a mini assembly line: GroupNorm → Swish Layer → Convolution → GroupNorm → Swish → Convolution → Addition

These layers work together to refine the image iteratively, ensuring that even as noise is introduced, the essence of the image is not lost.

Attention Blocks

Focusing on Details

At resolutions like 16×16 and 8×8 pixels, the network deploys attention blocks to hone in on crucial correlations:

Flatten → Self-Attention → Addition This mechanism allows the model to “pay attention” to different parts of the image simultaneously, much like a tech-savvy conductor orchestrating a symphony of pixels.

The network repeatedly :

- downsamples the image to a lower resolution to process it

- upsamples it back to its original glory

Custom layers like SpatialFlattenLayer and SpatialUnflattenLayer ensure that the transformation from 2D to 1D (and back again) is as smooth as your favorite playlist.

Diffusion-Based Filtering: (Smoothing Out the Rough Edges)

Beyond just generating images, diffusion-based filtering plays a crucial role in smoothing image data removing noise without erasing those all-important edges and high-frequency details. This technique relies on solving a diffusion process partial differential equation (PDE) to create a series of progressively blurred images, ensuring that the essential features of the image remain intact.

From Text to Image: The DALL-E & GLIDE Saga

Enter DALL-E and its trusty sidekick, GLIDE—AI models that take textual prompts and turn them into visual art. Here’s how they work their magic:

- Text Prompt Input: A carefully crafted description is fed into a text encoder that maps it to a representation space.

- The Prior Model: Next, a model (aptly named “the prior”) translates this text encoding into a corresponding image encoding, capturing the semantic essence of the prompt.

- Image Decoding: Finally, an image decoder stochastically generates an image that brings the text’s vision to life. In the world of AI, it’s all about linking textual semantics with visual representations—a match made in digital heaven!

- https://aws.amazon.com/what-is/stable-diffusion

- https://stabledifffusion.com/faq

- Roboflow Blog on Synthetic Data(https://blog.roboflow.com/synthetic-data-dall-e-roboflow/)

- https://www.diffusionai.art/what-is-stable-diffusion/?utm_source=chatgpt.com

- Generate Any Scene - University of Washington & Allen AI(https://generate-any-scene.github.io/)

Meet CLIP: The Ultimate Image-Text Wingman

CLIP (Contrastive Language-Image Pre-training)

is like that friend who always gets your jokes. It evaluates how well a caption fits an image by mapping both into a shared m-dimensional space and computing cosine similarities.

The training objective?

Maximize the similarity for the right pairs and minimize it for the wrong ones. CLIP ensures that your AI-generated art isn’t just pretty it’s contextually relevant too.

Prior Training and the DALL-E 2 Twist DALL-E 2 takes things a notch higher by integrating a modified GLIDE model with projected CLIP text embeddings. Here’s the backstage pass: Diffusion Prior: At its core is a decoder-only Transformer, which processes an ordered sequence including tokenized text, CLIP text encodings, diffusion timestep encodings, and noised image encodings from the CLIP image encoder. Mapping the Magic: The process begins with the CLIP text encoder mapping the image description into its representation space. The diffusion prior then maps this into a CLIP image encoding. Finally, the generation model uses reverse diffusion to create one of many possible images that perfectly encapsulate the semantic information in your caption.

Stable Diffusion: Efficiency Meets Elegance

While a full-sized 512×512 image boasts 786,432 pixels, Stable Diffusion works its magic on a compressed latent space of just 16,384 values—48 times smaller! This efficiency means you can run Stable Diffusion on a desktop with an NVIDIA GPU sporting a modest 8 GB of RAM. Thanks to the clever use of variational autoencoders (VAEs) in the decoder, even those fine details (like the twinkle in a digital eye) aren’t lost in translation.

A Quick Crash Course on Autoencoders & VAEs

Autoencoders are neural networks that learn to compress data into a simplified format and then reconstruct it with impressive accuracy. Throw in some probabilistic flair, and you have a VAE—short for Variational Autoencoder—which adds a bit of uncertainty (in a good way) to the mix. This probabilistic twist is exactly what enables models like Stable Diffusion to generate such high-quality images without requiring a supercomputer.

Wrapping It Up: Diffusion, Deduction, and Digital Delights

From the initial chaos of forward diffusion to the artful reconstruction of reverse diffusion, modern AI models are mastering the art of turning noise into nuance. Whether it’s through residual blocks, attention mechanisms, or clever text-to-image mappings, these techniques are pushing the boundaries of what’s possible in image generation.

So next time you marvel at a piece of AI-generated art, remember: behind every pixel lies a beautifully orchestrated process of diffusion, deduction, and a dash of digital magic. And hey, in the realm of AI, sometimes it really is best to go with the flow—after all, the only constant is change (and a little bit of noise)!

Expanding Possibilities with Synthetic Data

Synthetic data is a game-changer, helping AI models learn better by generating vast amounts of training samples.

Roboflow has introduced an API that integrates

DALL-E and GPT-4 Vision to create synthetic datasets for AI training. By leveraging a base image and AI-generated enhancements, this tool expands dataset variety, improving model accuracy and robustness. Pushing Boundaries: The Future of AI-Generated Images Researchers continue to push the limits of AI image generation.

Exciting developments include:

A self-improving framework that allows models to refine their outputs over time. Distillation techniques to transfer strengths from proprietary models to open-source alternatives.

Better content moderation by using synthetic data to detect and mitigate biases in AI models.

Wrapping It Up From the controlled chaos of diffusion models to the artistic finesse of DALL-E and the efficiency of Stable Diffusion, AI image generation has come a long way. Whether it’s for artistic creation, synthetic data production, or content moderation, these innovations are shaping the future of AI-driven visuals. Sources:

Stay tuned for more deep dives into the tech that’s reshaping our digital landscape. Until next time, keep it cool and let the good (diffused) vibes roll! (/cite: thank you GPT 4o for rephrasing and removing my dumb grammatical mistakes)